Magic Cache for GitHub Actions

How to use RunsOn Magic Cache to speed up your builds and get unlimited cache on GitHub Actions runners, by swapping the cache backend to S3.

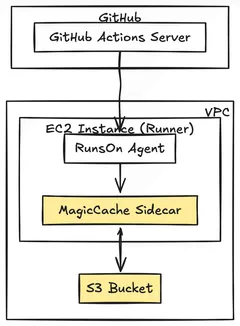

RunsOn Magic Cache transparently switches the GitHub Actions caching backend to a local, fast, and unlimited S3 cache backend:

- Speed: up to 5x faster than the official GitHub Actions cache.

- Unlimited: no cache size limit. Items are evicted based on the cache eviction policy defined in your RunsOn stack (default: 10 days).

- Local: The cache is stored locally in your VPC, so it’s fast, reliable, and secure.

The Magic Cache is disabled by default. To enable it, opt in by setting the extras=s3-cache job label.

Use cases

- Transparent accelerator for actions/cache-related actions (including language-specific actions).

- Transparent accelerator for the type=gha BuildKit exporter when building Docker images.

How it works

When the magic cache is enabled, the RunsOn agent will start a sidecar cache backend on the runner, and direct the various caching actions (including the gha cache backend for Docker Buildx) to send caching requests to the sidecar.

The sidecar will then transparently forward the requests to the S3 cache backend, and return the cache hits back to the caching actions.

This means that you can use the same caching configuration for your builds, regardless of whether you’re using the official GitHub Actions cache or the S3 cache.

How to use

Using the magic cache is simple:

- Set an additional job label: extras=s3-cache.

- Add the runs-on/action@v2 action to your job.

- Use the normal caching actions as before.

Note that when running on official GitHub Actions runners, the runs-on/action@v2 action will just be a no-op, so it’s fine to keep in your workflows even if you mix official and RunsOn runners. This action will also soon be used to configure more advanced aspects of the runner, like CloudWatch monitoring, SSM agent enablement, etc.
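The three steps above can be sketched as a minimal workflow. The extras=s3-cache label, the runner=2cpu-linux-x64 runner type, and runs-on/action@v2 come from this page; the workflow name, job name, cache path, and cache key are illustrative assumptions for a hypothetical npm project.

```yaml
name: build  # hypothetical workflow name
on: push
jobs:
  build:
    # Step 1: opt in with the extras=s3-cache job label.
    runs-on: runs-on=${{ github.run_id }}/runner=2cpu-linux-x64/extras=s3-cache
    steps:
      # Step 2: add the RunsOn action (a no-op on official GitHub runners).
      - uses: runs-on/action@v2
      - uses: actions/checkout@v4
      # Step 3: an ordinary cache step; path and key are assumptions.
      - uses: actions/cache@v4
        with:
          path: ~/.npm
          key: npm-${{ runner.os }}-${{ hashFiles('**/package-lock.json') }}
      - run: npm ci
```

Nothing in the cache step refers to S3: the same step should run unchanged if the job is later moved back to an official runner.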

Accelerate actions/cache

As an example, the workflow below will compare the speed of the magic cache vs official runners for multiple cache sizes by generating a random file, saving it to the cache, and then restoring it from the cache. It uses the official actions/cache action to save and restore the cache, but it should also work with any language-specific caching actions (e.g. actions/setup-node, ruby/setup-ruby, etc.).

    jobs:
      test-magic-cache-speeds:
        strategy:
          fail-fast: false
          matrix:
            runner:
              - runs-on=${{github.run_id}}/runner=2cpu-linux-x64/extras=s3-cache
              - ubuntu-latest
            blocks:
              - 4096 # 4GB
              - 2048 # 2GB
              - 512 # 512MB
              - 64 # 64MB
        runs-on: ${{ matrix.runner }}
        env:
          FILENAME: random-file
        steps:
          - uses: runs-on/action@v2
          - name: Generate file
            run: |
              echo "Generating ${{ matrix.blocks }}MiB random file..."
              dd if=/dev/urandom of=${{ env.FILENAME }} bs=1M count=${{ matrix.blocks }}
              ls -lh ${{ env.FILENAME }}
          - name: Save to cache (actions/cache)
            uses: actions/cache/save@v4
            with:
              path: ${{ env.FILENAME }}
              key: github-${{github.run_id}}-actions-cache-${{strategy.job-index}}-${{ matrix.blocks }}MiB-${{ env.FILENAME }}
          - name: Restore from cache (actions/cache)
            uses: actions/cache/restore@v4
            with:
              path: ${{ env.FILENAME }}
              key: github-${{github.run_id}}-actions-cache-${{strategy.job-index}}-${{ matrix.blocks }}MiB-${{ env.FILENAME }}
          - name: Restore from cache (actions/cache, restoreKeys)
            uses: actions/cache/restore@v4
            with:
              path: ${{ env.FILENAME }}
              key: github-${{github.run_id}}-actions-cache-${{strategy.job-index}}-unknown
              restore-keys: |
                github-${{github.run_id}}-actions-cache-${{strategy.job-index}}-${{ matrix.blocks }}MiB-

Accelerate docker builds

As above, set extras=s3-cache and add runs-on/action@v2, then use the gha cache backend for Docker Buildx as you would on official GitHub Actions runners. Your Docker layers will transparently get stored in your local S3 bucket.

    jobs:
      docker:
        strategy:
          fail-fast: false
          matrix:
            runner:
              - runs-on=${{github.run_id}}/runner=2cpu-linux-x64/extras=s3-cache
              - ubuntu-latest
        runs-on: ${{ matrix.runner }}
        steps:
          - uses: runs-on/action@v2
          - uses: actions/checkout@v6
            with:
              repository: dockersamples/example-voting-app
          - name: Generate random file to test caching
            run: |
              dd if=/dev/urandom of=vote/random.bin bs=1M count=1024
              ls -lh vote/random.bin
          - name: Set up Docker Buildx
            uses: docker/setup-buildx-action@v3
          - name: "Build and push image (type=gha)"
            uses: docker/build-push-action@v4
            with:
              context: "vote"
              push: false
              tags: test
              cache-to: type=gha,mode=max
              cache-from: type=gha

Speed depends on file size and instance type: the larger the files and the instance, the faster saving and restoring will be (up to 5x compared to official runners).

The cache is unlimited: items are evicted based on the cache eviction policy defined in your RunsOn stack (default: 10 days).

The cache mechanism is free, and the bandwidth (ingress/egress) is also free, since everything stays within your VPC, and the VPC has a (free) S3 gateway attached. You only pay for S3 storage.

Limitations

- The magic cache is only available on Linux runners for now.

- Currently, the EC2 instance role assigned to the runners has full access to the entire cache bucket, so make sure you are fine with the lack of isolation across repositories handled by RunsOn if you want to use this cache. While this might actually be a desirable feature for certain use cases, future versions will restrict cache access to only the current repository.

- The magic cache is opt-in only, and you need to add the runs-on/action@v2 action to your jobs.

Common issues

actions/upload-artifact compatibility

If you have enabled the s3-cache extra, and you are using the actions/upload-artifact@v4 action in your workflows, you must ensure that you have also included the runs-on/action@v2 action in your jobs.

If you don’t, you might see an error like this:

Attempt 1 of 5 failed with error: Unexpected token 'O', "Original A"... is not valid JSON. Retrying request in 3000 ms...
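A job that should avoid this error might look like the sketch below. The job name, artifact name, and dist/ path are hypothetical. Placing runs-on/action@v2 as the first step mirrors the other examples on this page and is an assumption here, not a documented requirement.

```yaml
jobs:
  build:
    runs-on: runs-on=${{ github.run_id }}/runner=2cpu-linux-x64/extras=s3-cache
    steps:
      # Included because the s3-cache extra is enabled for this job.
      - uses: runs-on/action@v2
      - uses: actions/checkout@v4
      # Hypothetical build step that produces a file to upload.
      - run: mkdir -p dist && echo "hello" > dist/output.txt
      - uses: actions/upload-artifact@v4
        with:
          name: build-output
          path: dist/
```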