S3 cache for GitHub Actions

GitHub offers a default cache for actions, which can be used in the following way:

    steps:
      - uses: actions/checkout@v4

      - uses: actions/cache@v4
        with:
          path: path/to/dependencies
          key: ${{ runner.os }}-${{ hashFiles('**/lockfiles') }}

      - name: Install Dependencies
        run: ./install.sh

However, this GitHub Actions cache has a number of limitations:

- total cache size is limited to 10GB for each repository. When this limit is reached, items are evicted from the cache to make room for others. On busy repositories with large caches (rust, docker, npm, etc.), you can easily reach that size. This leads to far more cache misses, and slower execution times for your workflows.

- slow throughput: on GitHub it’s rare to see throughputs of more than 50MB/s when uploading or restoring a cache. This means your caching steps might take 30s or more for large caches.

Introducing runs-on/cache

Our drop-in replacement action runs-on/cache transparently switches the storage backend of your GitHub Actions workflows to an S3 bucket.

This means you only need to change one line:

    - - uses: actions/cache@v4
    + - uses: runs-on/cache@v4
        with:
          ...

All the official options are supported.

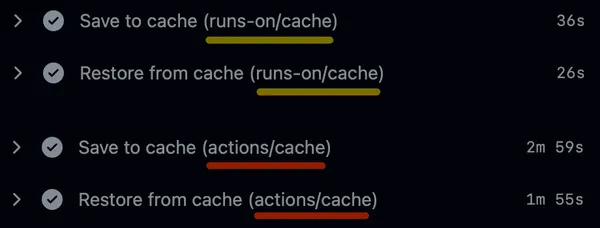

Results (comparison between RunsOn and GitHub for a 5GB cache size):

- ✅ throughput speed between 300MB/s and 500MB/s for large cache items,

- ✅ no limit on the total cache size per repository (an S3 bucket has no fixed storage cap),

- ✅ if the action cannot find a valid S3 bucket configuration, it automatically falls back to the official GitHub Actions cache, so you can easily switch runner providers.

The repository is available at runs-on/cache on GitHub.

Using runs-on/cache

From RunsOn

In this case, you just need to change a single line:

    - - uses: actions/cache@v4
    + - uses: runs-on/cache@v4
        with:
          ...

That’s it. All the other options from the official action are available.
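For context, here is a minimal sketch of a complete workflow after the switch. It reuses the steps from the example at the top of this page; the workflow name, the trigger, and the `runs-on` label are placeholders chosen for illustration, and `restore-keys` is shown only as an example of an option carried over from the official action.

```yaml
name: build            # hypothetical workflow name
on: push               # hypothetical trigger

jobs:
  build:
    # Assumption: placeholder label. Replace it with the label of your RunsOn runner.
    # On a runner without an S3 bucket configuration, the action falls back
    # to the official GitHub Actions cache.
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      # Same inputs as actions/cache@v4, only the action name changes.
      - uses: runs-on/cache@v4
        with:
          path: path/to/dependencies
          key: ${{ runner.os }}-${{ hashFiles('**/lockfiles') }}
          # Optional input of the official action: prefix used to look for
          # an older cache entry when the exact key is not found.
          restore-keys: |
            ${{ runner.os }}-

      - name: Install Dependencies
        run: ./install.sh
```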

From GitHub or another provider

In this case, add a preliminary step to set up your AWS credentials with aws-actions/configure-aws-credentials, and specify the name of your S3 bucket in an environment variable:

      - uses: aws-actions/configure-aws-credentials@v4
        ...
    - - uses: actions/cache@v4
    + - uses: runs-on/cache@v4
        with:
          ...
        env:
          RUNS_ON_S3_BUCKET_CACHE: name-of-your-bucket

Gotchas

If you are already setting AWS credentials in a previous step of your workflow, you might encounter a 403 error, because those credentials will take precedence over the ones coming from the runner EC2 instance profile.

To avoid this, you can unset the credentials in a previous step of your workflow, using the unset-current-credentials option from aws-actions/configure-aws-credentials.

    - name: Reset AWS credentials
      uses: aws-actions/configure-aws-credentials@v4
      with:
        aws-region: ${{ env.RUNS_ON_AWS_REGION }}
        unset-current-credentials: true

Considerations

If you are running this action from RunsOn, an S3 bucket will have been created for you, and runners will automatically get access to it, without any authentication required.

This bucket has the following properties:

- it is local to your RunsOn stack, in the same region, and all the traffic stays within the VPC, via the use of a free S3 gateway VPC endpoint.

- can be accessed without any credential setup from your runners. The bucket name is contained in the ${{ env.RUNS_ON_S3_BUCKET_CACHE }} environment variable. Note that all RunsOn runners have the IAM role to access the S3 bucket. If you require stronger isolation, you can configure multiple RunsOn stacks and use environments to isolate different workflows.

- unlimited size, instead of the default 10GB available on GitHub.

- has insane network throughput, at least 3x what you will find on GitHub (100MB/s for the default GitHub cache backend vs 300-400MB/s for the RunsOn S3 backend).

- can be used as a drop-in replacement for the official actions/cache@v4 action. If the workflow is run outside RunsOn, it will transparently fall back to the default GitHub caching backend.

- can be used to cache docker layers.
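As an illustration of the docker layer caching mentioned in the list above, the sketch below points the BuildKit `s3` cache backend at the same bucket. It is one possible setup and not necessarily the configuration RunsOn recommends: the action versions, the `context` and `push` values, and the `prefix` value (picked here to match the cache/$GITHUB_REPOSITORY/ layout) are assumptions.

```yaml
steps:
  - uses: actions/checkout@v4

  # Buildx is needed to use BuildKit cache backends such as s3.
  - uses: docker/setup-buildx-action@v3

  - uses: docker/build-push-action@v6
    with:
      context: .
      push: false
      # Assumption: the prefix is an illustrative choice, any prefix in the bucket would work.
      cache-from: type=s3,region=${{ env.RUNS_ON_AWS_REGION }},bucket=${{ env.RUNS_ON_S3_BUCKET_CACHE }},prefix=cache/${{ github.repository }}/
      # mode=max also exports the layers of intermediate build stages.
      cache-to: type=s3,region=${{ env.RUNS_ON_AWS_REGION }},bucket=${{ env.RUNS_ON_S3_BUCKET_CACHE }},prefix=cache/${{ github.repository }}/,mode=max
```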

To avoid the S3 bucket growing indefinitely, a lifecycle rule on the bucket automatically removes items older than 10 days. Cache keys are automatically prefixed with cache/$GITHUB_REPOSITORY/.
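If you bring your own bucket (the "From GitHub or another provider" case), no lifecycle rule exists unless you create one. The CloudFormation fragment below is a minimal sketch of a comparable 10-day expiration rule. It is not taken from the RunsOn stack template; the logical resource name and the rule Id are hypothetical, and the bucket name is the placeholder used earlier on this page.

```yaml
Resources:
  CacheBucket:                          # hypothetical logical name
    Type: AWS::S3::Bucket
    Properties:
      BucketName: name-of-your-bucket   # placeholder from the example above
      LifecycleConfiguration:
        Rules:
          - Id: expire-old-cache-entries   # hypothetical rule name
            Status: Enabled
            # Objects are expired 10 days after they were created.
            ExpirationInDays: 10
```

This rule applies to the whole bucket. If the bucket also holds other data, you may prefer to scope the rule to the cache/ prefix.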